TL;DR — Re-engineering VA Mail at Federal Scale

U.S. Department of Veterans Affairs’ $70.4 million “Paper-Mail Conversion”

We won the opening slice of a multi-year programme to digitize every piece of mail that the VA’s 400,000-strong workforce touches.

As Lead Product Designer & Business Analyst at iCONECT, I turned the VA’s multi-tier, paper-heavy routing maze into a no-code, rules-driven e-delivery service that bolts onto the existing iCONECT stack for OCR, AI analytics and metadata enrichment. I guided the end-to-end UX and workflow strategy—from gap analysis to prototype to pilot.

Early pilot on 1 700 users (~1 million packets/year) shows:

90 % faster “packet-to-first-action” → days to minutes

50 % fewer human hand-offs

Reviewer throughput jumped from 1–2 packets/day to one every few minutes

At full scale those gains translate into thousands of staff-years and millions of dollars saved annually—while giving Veterans dramatically quicker responses. (For context, FOIA alone logged 1.3 million government-wide requests last year, underscoring the backlog we’re shrinking.)

Setting the Stage - Volume & Variety

About 14 million packets/year break down into everything from disability-benefit claims (Form 21-526EZ) and prescription refills (VA Form 10-0426) to FOIA & HIPAA requests that must be redacted before release, and much more.

Each packet could travel through a multi-tier maze before it can be resolved:

Sector (enterprise domain)

High-level cases that could belong to different sectors, such as Veterans Benefits, Veterans Health, or National Cemeteries.

Department (within a sector)

Each department (business, pharmacy, support) within a sector could have its own specialized workflow. Packets need domain-specific rules and can create work orders.

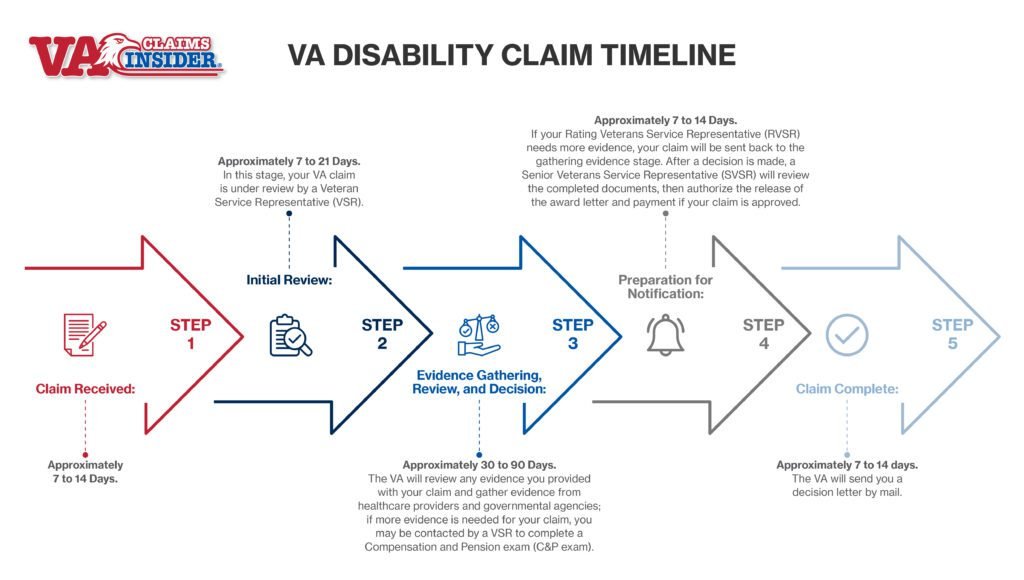

A “simple” five-step claim hides a thicket of hidden loops.

Every sector layers on its own business rules: Benefits can’t move forward until Health redacts PHI; Debt won’t release payment until QA signs off; claims lacking supporting evidence ping-pong back to Support and then re-enter the analyst queue.

The same packet can cycle through 3–6 departments, dozens of specialists, and multiple approvals, each with different SLAs and compliance checks.

When those rules live in email chains and spreadsheets, status vanishes, deadlines slip, and Veterans wait weeks for answers. Our challenge was to surface—and codify—those invisible detours so any sector could model its rules (redact → approve → QA → archive → reopen) in clicks, not code. The goal: one shared rail that flexes to every department’s nuances while keeping every packet visible, owned, and moving.

Processing Layer (within department)

Depending on the request, a packet could be re-routed or sent for specialized work, then approved by a reviewer and checked by quality control.

Pain-point and Workflow

Gap Analysis & Infrastructure Architecture

This gap analysis informed all subsequent design: by standardizing folder-taxonomy and metadata across the enterprise, we created the scaffolding for automated workflows.

New metadata rails - Introduced Packet Status and Responsible Party fields to make every hand-off explicit, similar to adding key fields in a case-management system.

Lifecycle labelling - Defined a clear status progression from Pending Review → Review → Ready, so anyone can see, filter, and eventually automate where each packet sits in its journey.

Manual-assignment console - Shipped an override panel that lets supervisors manually assign packets to the right department or reviewer, delivering the baseline control the VA needed to award the contract while the full automation rolled out.

We began by studying the existing workflow and the VA's folder infrastructure. Interviews and shadowing with FOIA specialists revealed a "packets" workflow in which documents are handed off between offices. We found the existing folder hierarchy could mirror the VA's departmental structure, but critical fields were missing. For example, there was no "User Assigned" or "Packet Status" metadata, so packets had no lifecycle labels. We proposed and developed new metadata fields and status labels for each folder. This enabled true lifecycle tracking: now each packet could carry owner, status, and action-date information. In effect, folders became both visual groupings and logic triggers: we could reuse existing taxonomies (e.g., CHAMPVA › PO BOX 469063, General incoming) as routing lanes, without rebuilding.
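To make the metadata model concrete, here is a minimal Python sketch of a packet carrying owner, status, and action-date fields. The status values come from the lifecycle described above; the class names, the `hand_off` method, the packet ID, and the folder string are illustrative assumptions, not the actual iCONECT schema.

```python
from dataclasses import dataclass
from datetime import date
from enum import Enum
from typing import Optional


class PacketStatus(Enum):
    # Lifecycle labels named in the text; member names are illustrative.
    PENDING_REVIEW = "Pending Review"
    REVIEW = "Review"
    READY = "Ready"


@dataclass
class Packet:
    packet_id: str                            # hypothetical identifier
    folder: str                               # existing taxonomy path, reused as a routing lane
    status: PacketStatus = PacketStatus.PENDING_REVIEW
    responsible_party: Optional[str] = None   # who currently owns the packet
    action_date: Optional[date] = None        # when the last hand-off happened

    def hand_off(self, to: str, status: PacketStatus, on: date) -> None:
        """Record an explicit hand-off: new owner, new status, and its date."""
        self.responsible_party = to
        self.status = status
        self.action_date = on


# Hypothetical packet in an assumed folder lane.
packet = Packet("PKT-0001", "CHAMPVA > General incoming")
packet.hand_off("reviewer.a", PacketStatus.REVIEW, date(2024, 1, 15))
print(packet.status.value, packet.responsible_party, packet.action_date)
```

Because every hand-off writes all three fields at once, a filter such as "all packets in Review owned by reviewer.a" becomes a simple query rather than a search through email chains.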

Routing Logic and Business Rules

Think of it as Zapier meets Monday.com

Traditionally, staff manually sorted and routed documents to the correct office or queue, which was time-consuming and error-prone.

I began by mapping the entire mail intake process end-to-end, from letter arrival to digital onboarding. We created journey maps and a detailed service blueprint covering every touchpoint (mail clerks, scanning centres, FOIA officers, VA IT, prime contractors) and used RACI and stakeholder matrices to align tasks among VA, integrators and vendors.

We quickly introduced a no-code rule canvas called Doc Rule to automate this process. In Doc Rule, business users (e.g. mailroom supervisors or case managers) could chain together condition-based rules that automatically tag documents, output metadata fields, and route packets based on content or context. This addressed a key business problem: orchestrating complex routing logic at enterprise scale.

When Status = Empty AND Form Classification = “10-10D”

Move packet → Folder: CHAMPVA 10-10D (so it lands in the correct work queue)

Notify owner → Queue Supervisor with the message “New health-care reimbursement application awaiting triage.”

This digital trigger chain cut cycle time dramatically: legacy workflows took weeks, whereas automated pipelines process mail in hours. We ensured each handoff was auditable, so managers can see who handled each packet.
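The CHAMPVA 10-10D rule above can be sketched in Python as a list of conditions joined by AND, plus an ordered list of actions. This is only an illustration of the condition-to-action pattern: the `Rule` class, the `move_to` and `notify` helpers, the dictionary-based packet, and the field keys are assumptions, not Doc Rule's internal design.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

Packet = Dict[str, str]  # simplified: a packet is a dict of metadata fields


@dataclass
class Rule:
    name: str
    conditions: List[Callable[[Packet], bool]]  # all must hold (AND)
    actions: List[Callable[[Packet], None]]     # run in order when the rule fires

    def apply(self, packet: Packet) -> bool:
        """Run the actions if every condition matches; report whether the rule fired."""
        if all(cond(packet) for cond in self.conditions):
            for act in self.actions:
                act(packet)
            return True
        return False


notifications: List[str] = []  # stand-in for a real notification channel


def move_to(folder: str) -> Callable[[Packet], None]:
    def action(packet: Packet) -> None:
        packet["folder"] = folder
    return action


def notify(recipient: str, message: str) -> Callable[[Packet], None]:
    def action(packet: Packet) -> None:
        notifications.append(f"{recipient}: {message}")
    return action


champva_rule = Rule(
    name="Route CHAMPVA 10-10D",
    conditions=[
        lambda p: p.get("status", "") == "",                 # Status = Empty
        lambda p: p.get("form_classification") == "10-10D",  # Form Classification
    ],
    actions=[
        move_to("CHAMPVA 10-10D"),
        notify("Queue Supervisor",
               "New health-care reimbursement application awaiting triage."),
    ],
)

packet = {"status": "", "form_classification": "10-10D", "folder": "General incoming"}
fired = champva_rule.apply(packet)
print(fired, packet["folder"], notifications)
```

Keeping conditions and actions as plain data is what lets a no-code canvas assemble rules from building blocks; appending each fired rule to a log would give the audit trail mentioned above.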

Automation, designed for humans in the loop

After setting up the basic infrastructure for data flow, we layered AI-driven actions on top.

The concept is straightforward but powerful:

"When X happens, and Y conditions are met, then do Z."

But that logic extends further with an AI action: an open-ended "Z" that can do almost anything without code.

The VA receives many document types (forms, letters, reports), and relying on fixed templates or manual data entry was a bottleneck. The AI action lets users define page-specific prompts for a large language model (LLM), effectively teaching it which fields to extract from each document type. In the UI, a user can daisy-chain the output of one field into the prompt for another. The system then runs the LLM on incoming pages of that type and auto-populates fields when confidence is high. This solved the problem of inconsistent or manual data capture: as HLP’s AI tools demonstrate, an AI that “pinpoints the most relevant data with unprecedented speed and accuracy” greatly improves efficiency. In our design, we made the AI process transparent: users see the suggested values and confidence scores, and can tweak prompts on the fly.
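The confidence-gated, daisy-chained extraction described above might look roughly like the following Python sketch. Everything here is assumed for illustration: the `FieldPrompt` structure, the `run_ai_action` function, the 0.8 threshold, and especially `fake_extract`, which is a canned stand-in for a real LLM call and returns made-up values.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

# An extractor takes (prompt, page_text) and returns (value, confidence in [0, 1]).
Extractor = Callable[[str, str], Tuple[str, float]]


@dataclass
class FieldPrompt:
    field_name: str
    prompt: str  # may reference earlier fields as {field_name}


def run_ai_action(page_text: str, prompts: List[FieldPrompt],
                  extract: Extractor, threshold: float = 0.8):
    """Return (auto-populated fields, all suggestions with confidence scores)."""
    suggestions: Dict[str, Tuple[str, float]] = {}
    populated: Dict[str, str] = {}
    for fp in prompts:
        # Daisy chain: earlier suggested values are substituted into later prompts.
        chained = {name: value for name, (value, _) in suggestions.items()}
        prompt = fp.prompt.format(**chained)
        value, confidence = extract(prompt, page_text)
        suggestions[fp.field_name] = (value, confidence)  # always shown to the user
        if confidence >= threshold:                       # auto-fill only when confident
            populated[fp.field_name] = value
    return populated, suggestions


def fake_extract(prompt: str, page_text: str) -> Tuple[str, float]:
    # Hypothetical stub with canned answers; a real system would call an LLM here.
    canned = {
        "Extract the form number.": ("10-10D", 0.97),
        "Given form 10-10D, extract the applicant name.": ("J. Doe", 0.55),
    }
    return canned.get(prompt, ("", 0.0))


prompts = [
    FieldPrompt("form_number", "Extract the form number."),
    FieldPrompt("applicant_name", "Given form {form_number}, extract the applicant name."),
]
populated, suggestions = run_ai_action("(page text)", prompts, fake_extract)
print(populated)
print(suggestions)
```

In this sketch the low-confidence applicant name is kept as a suggestion but not written to the packet, which mirrors the human-in-the-loop intent: reviewers see every value and score, and only confident results are applied automatically.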