TL;DR — Re-engineering VA Mail at Federal Scale

U.S. Department of Veterans Affairs’ $70.4 million “Paper-Mail Conversion”

We won the opening slice of a multi-year programme to digitize every piece of mail that the VA’s 400,000-strong workforce touches.

As Lead Product Designer & Business Analyst at iCONECT, I turned the VA’s multi-tier, paper-heavy routing maze into a no-code, rules-driven e-delivery service that bolts onto the existing iCONECT stack for OCR, AI analytics and metadata enrichment. I guided the end-to-end UX and workflow strategy—from gap analysis to prototype to pilot.

Early pilot with 1,700 users (~1 million packets/year) shows:

90 % faster “packet-to-first-action” → days to minutes

50 % fewer human hand-offs

Reviewer throughput jumped from 1–2 packets/day to one every few minutes

At full scale those gains translate into thousands of staff-years and millions of dollars saved annually—while giving Veterans dramatically quicker responses. (For context, FOIA alone logged 1.3 million government-wide requests last year, underscoring the backlog we’re shrinking.)

Setting the Stage - Volume & Variety

About 14 million packets/year break down into everything from disability-benefit claims (Form 21-526EZ) and prescription refills (VA Form 10-0426) to FOIA & HIPAA requests that must be redacted before release, and much more.

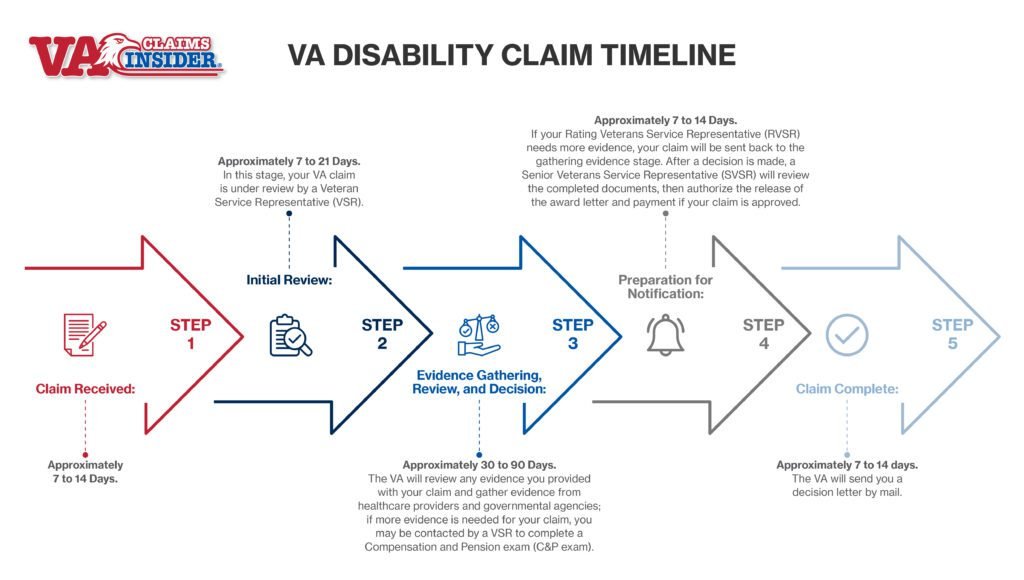

Each packet could travel through a multi-tier maze before it can be resolved:

Sector (enterprise domain)

A high-level case could belong to different sectors, such as Veterans Benefits, Veterans Health, or National Cemeteries.

Department (within a sector)

Each department (business, pharmacy, support) within a sector could have its own specialized workflow. Packets need domain-specific rules and must generate work orders.

A “simple” five-step claim hides a thicket of hidden loops.

Every sector layers on its own business rules: Benefits can’t move forward until Health redacts PHI; Debt won’t release payment until QA signs off; claims lacking supporting evidence ping-pong back to Support and then re-enter the analyst queue.

The same packet can cycle through 3–6 departments, dozens of specialists, and multiple approvals, each with different SLAs and compliance checks.

When those rules live in email chains and spreadsheets, status vanishes, deadlines slip, and Veterans wait weeks for answers. Our challenge was to surface—and codify—those invisible detours so any sector could model its rules (redact → approve → QA → archive → reopen) in clicks, not code. The goal: one shared rail that flexes to every department’s nuances while keeping every packet visible, owned, and moving.

Processing Layer (within department)

Depending on the request, a packet could be re-routed or sent for specialized work, then approved by a reviewer and checked by quality control.

Pain-point and Workflow

Gap Analysis & Infrastructure Architecture

This gap analysis informed all subsequent design: by standardizing folder-taxonomy and metadata across the enterprise, we created the scaffolding for automated workflows.

New metadata rails - Introduced Packet Status and Responsible Party fields to make every hand-off explicit, similar to adding key fields in a case-management system.

Lifecycle labelling - Defined a clear status progression from Pending Review → Review → Ready, so anyone can see, filter, and eventually automate where each packet sits in its journey.

Manual-assignment console - Shipped an override panel that lets supervisors manually assign packets to the right department or reviewer, delivering the baseline control the VA needed to award the contract while the full automation rolled out.

We began by studying the existing workflow and the VA's folder infrastructure. Interviews and shadowing with FOIA specialists revealed a "packets" workflow where documents are handed off between offices. We found the existing folder hierarchy could mirror the VA's departmental structure, but critical fields were missing. For example, there was no "User Assigned" or "Packet Status" metadata, so packets had no lifecycle labels. We proposed and added new metadata fields and status labels to each folder. This enabled true lifecycle tracking: now each packet could carry owner, status, and action-date information. In effect, folders became both visual groupings and logic triggers: we could reuse existing taxonomies (e.g., CHAMPVA › PO BOX 469063, General incoming) as routing lanes, without rebuilding.
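To make the lifecycle fields concrete, here is a minimal sketch of a packet record carrying owner, status, and action date. The status labels come from the text. The class names, the forward-only transition table, the packet ID, and the sample folder path are illustrative assumptions, not the actual iCONECT schema.

```python
from dataclasses import dataclass
from datetime import date
from enum import Enum
from typing import Optional


class PacketStatus(Enum):
    # Labels taken from the text; the enum itself is an illustration.
    PENDING_REVIEW = "Pending Review"
    REVIEW = "Review"
    READY = "Ready"


# Assumption: the lifecycle only moves forward, one step at a time.
NEXT_STATUS = {
    PacketStatus.PENDING_REVIEW: PacketStatus.REVIEW,
    PacketStatus.REVIEW: PacketStatus.READY,
}


@dataclass
class Packet:
    packet_id: str
    folder: str  # the folder path doubles as a routing lane
    status: PacketStatus = PacketStatus.PENDING_REVIEW
    responsible_party: Optional[str] = None
    action_date: Optional[date] = None

    def advance(self, today: date) -> None:
        """Move to the next lifecycle status and stamp the action date."""
        if self.status not in NEXT_STATUS:
            raise ValueError(f"{self.status.value} is the final status")
        self.status = NEXT_STATUS[self.status]
        self.action_date = today

    def assign(self, party: str, today: date) -> None:
        """Manual override: a supervisor sets the owner explicitly."""
        self.responsible_party = party
        self.action_date = today


# Hypothetical packet ID, reviewer name, and folder path.
packet = Packet("PKT-001", "CHAMPVA/General incoming")
packet.assign("reviewer.a", date(2024, 1, 2))
packet.advance(date(2024, 1, 3))
print(packet.status.value, packet.responsible_party)
```

Keeping status and owner as explicit fields, rather than inferring them from folder location, is what lets anyone filter on them and, later, lets rules automate against them.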

From Static Rails to a Living Workflow

Gap-analysis gave us the rails — Folder hierarchy + Packet Status + Responsible Party.

Next we had to set those rails in motion without drowning every department in custom code.

Previously, clerks walked cartons of paper from one office to the next and tracked status in spreadsheets. To replace that invisible choreography with software, I first mapped the entire mail-intake journey end-to-end, from post-mark to scanning centre, intake clerk, analyst, QA and archive.

Service blueprints, journey maps, and a full RACI matrix exposed every touch-point and every decision gate that stalled packets.

Design constraints & Requirements

Self-service: Every sector—Benefits, Health, National Cemeteries—revises its business rules as legislation, policy, or staffing changes. Waiting on two-week dev sprints would stall the whole programme.

Shared mental model: Clerks and analysts think in folders and fields, not scripts. Automations must read like plain English so supervisors can own and audit them.

Object-oriented UX → Doc Rule Canvas

Borrowing from systems thinking and OOUX, we treated folders as objects, fields as states, and rules as verbs that move objects through states. We quickly landed on a new feature, Doc Rule: a no-code canvas where a business user stitches WHEN → IF → THEN blocks exactly the way the service blueprint reads.

Example rule (straight from the blueprint)

WHEN Status = Empty AND Form Classification = “10-10D”

THEN Run AI Prompt “Classify Attachments” → write result to Form Classification

Move packet → Folder ‹ CHAMPVA / 10-10D ›

Notify → Queue Supervisor (“New reimbursement claim”)
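One way to picture what sits behind such a rule is a small condition-plus-actions structure. The sketch below is an illustration only, not Doc Rule's actual implementation. The `Rule` class, the `move_to` and `notify` helpers, and the dict-based packet are all hypothetical. "Status = Empty" is modelled as a missing Status field, and the AI prompt step is left out to keep the example short.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List

# Assumption: a packet is modelled as a plain dict of metadata fields.
Packet = Dict[str, Any]
Action = Callable[[Packet], None]


@dataclass
class Rule:
    name: str
    when: Callable[[Packet], bool]  # WHEN / IF: condition on packet fields
    then: List[Action]              # THEN: actions applied in order


def move_to(folder: str) -> Action:
    """Build an action that files the packet under the given folder."""
    def action(packet: Packet) -> None:
        packet["Folder"] = folder
    return action


def notify(recipient: str, message: str) -> Action:
    """Build an action that records a notification on the packet."""
    def action(packet: Packet) -> None:
        packet.setdefault("Notifications", []).append((recipient, message))
    return action


def run_rules(packet: Packet, rules: List[Rule]) -> List[str]:
    """Evaluate each rule once, in order; return the names of rules that fired."""
    fired = []
    for rule in rules:
        if rule.when(packet):
            for action in rule.then:
                action(packet)
            fired.append(rule.name)
    return fired


champva_rule = Rule(
    name="Route 10-10D",
    when=lambda p: p.get("Status") is None
    and p.get("Form Classification") == "10-10D",
    then=[
        move_to("CHAMPVA / 10-10D"),
        notify("Queue Supervisor", "New reimbursement claim"),
    ],
)

packet = {"Form Classification": "10-10D"}
print(run_rules(packet, [champva_rule]))
print(packet["Folder"])
```

Because conditions and actions are plain data attached to a rule, a canvas can render them as blocks and a supervisor can audit them without reading code.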

AI - designed for human in the loop

Once Doc Rule could push packets through their life-cycle, we layered intelligence on top—not to replace analysts, but to present work and the right context.

Multi-model “first lens.”

Every newly-scanned page is run through a lightweight scan-quality model (is the image skewed? is OCR confidence < 85 %?). Pages that fail are flagged BadScan = Yes and branch to a “Rescan / Clean-up” queue, saving reviewers from deciphering soup-text later.

Form & entity classification.

Clean pages flow to a Large Language Model. Using prompt groups that mirror the document set, the LLM:

– classifies the form (e.g., VA 10-10D)

– extracts anchor entities (Veteran SSN, Claim ID, Date of Service)

– returns a confidence score for each field.

All outputs are written to their own metadata fields (Form Name, Veteran SSN, Extraction Confidence).

Confidence-aware routing.

Back in Doc Rule, those fields become pivots:

WHEN Form Name = “10-10D” AND Extraction Confidence ≥ 0.90

THEN Move → Folder CHAMPVA / 10-10D

Notify → Queue Supervisor (“AI-classified, high confidence”)

WHEN Extraction Confidence < 0.90

THEN Move → QA / Low-Confidence

Notify → Senior Analyst (“Review + correct”)

Continuous feedback loop.

QA fixes are logged as GroundTruth = True; a nightly job retrains the model on any record where human and AI disagreed. In early pilots, that closed the precision gap by ~5 pp every two weeks without separate data-science sprints.

Transparent controls for every department.

Because prompts, thresholds, and fall-back branches live in Doc Rule, Benefits can tighten PHI thresholds while Health loosens form detection—all without code. Analysts see highlighted suggestions inline, can accept or override with one click, and watch the confidence meter drop or rise in real time.
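The scan-quality gate, the confidence-aware routing, and the feedback filter described in this section can be sketched together as follows. The thresholds and queue names come from the text. The `PageResult` type, the "Unrouted" fallback, and the `ai_value` / `human_value` field names are hypothetical. Thresholds are module constants here for brevity, whereas the text has them living in Doc Rule and tunable per department.

```python
from dataclasses import dataclass
from typing import Any, Dict, List, Optional

OCR_MIN_CONFIDENCE = 0.85         # scan-quality threshold quoted in the text
EXTRACTION_MIN_CONFIDENCE = 0.90  # routing threshold quoted in the text


@dataclass
class PageResult:
    ocr_confidence: float
    skewed: bool
    form_name: Optional[str] = None
    extraction_confidence: float = 0.0


def route(page: PageResult) -> str:
    """Return the destination queue or folder for one scanned page."""
    # First lens: bad scans are diverted before any LLM output is trusted.
    if page.skewed or page.ocr_confidence < OCR_MIN_CONFIDENCE:
        return "Rescan / Clean-up"
    # Low-confidence extractions go to a human for review and correction.
    if page.extraction_confidence < EXTRACTION_MIN_CONFIDENCE:
        return "QA / Low-Confidence"
    if page.form_name == "10-10D":
        return "CHAMPVA / 10-10D"
    # Hypothetical fallback: high confidence, but no rule matches the form.
    return "Unrouted"


def retraining_candidates(records: List[Dict[str, Any]]) -> List[Dict[str, Any]]:
    """Feedback loop: keep QA-corrected records where human and AI disagreed."""
    return [
        r for r in records
        if r.get("GroundTruth") and r["ai_value"] != r["human_value"]
    ]


print(route(PageResult(ocr_confidence=0.97, skewed=False,
                       form_name="10-10D", extraction_confidence=0.93)))
```

Checking scan quality first means a skewed or low-OCR page is never routed on the strength of an extraction score computed from unreadable text.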

Why This Mattered

Scalable safety net. Even at 14 M pages a year, only the ambiguous 8–12 % reach humans first; the rest sail through with auditable confidence logs.

Domain agility. Each sector authors its own prompt sets (e.g., Pharmacy adds Controlled Substance checks) and tunes risk levels as policy shifts.

Design rationale. By exposing AI as just another verb in the When → If → Then grammar, we honored users’ mental model—folders and fields—while giving them machine speed. The result is an orchestration layer where AI and people pass the baton seamlessly, keeping every packet visible, accountable, and always moving forward.

Results & Reflection

The new system transformed the VA’s processing workflow. Before launch, typical packet-to-action time was 2–3 days with 8–10 manual touches (multiple hand-offs and re-keying) for each case. After implementation, most packets were routed within 4 hours, often automatically within minutes. This order-of-magnitude speedup meant packets consistently beat the FOIA mandate (20 working days) by a wide margin. In user experience terms, staff went from juggling spreadsheets to clicking through a streamlined dashboard.

Key outcomes:

- Turnaround time dropped from days to hours

- Manual touches were reduced by ~80% thanks to rule-based and AI-driven routing

- The platform initially served ~7,000 VA staff during pilot rollout; it is being scaled up to support all ~400,000 VA employees

- This initiative is part of a broader ~$345M enterprise workflow modernization contract

As lead designer and business analyst at iCONECT, my mandate was to translate messy, cross-agency business rules into interfaces anyone could use with existing infrastructure. During the process, I ran walkthroughs with various stakeholders, iterating on clickable prototypes before a single sprint began, so every party could see how their corner of the workflow would play out on-screen. For phase 2, we are ensuring the platform scales smoothly to all 400,000 VA employees while keeping every packet visible and accountable, and we are adding advanced logic to cross-reference AI output against VA expectations.